Random matrix

In probability theory and mathematical physics, a random matrix is a matrix-valued random variable. Many important properties of physical systems can be represented mathematically as matrix problems. For example, the thermal conductivity of a lattice can be computed from the dynamical matrix of the particle-particle interactions within the lattice.

Motivation

Physics

In nuclear physics, random matrices were introduced by Eugene Wigner[1] to model the spectra of heavy atoms. He postulated that the spacings between the lines in the spectrum of a heavy atom should resemble the spacings between the eigenvalues of a random matrix, and should depend only on the symmetry class of the underlying evolution.[2] In solid-state physics, random matrices model the behaviour of large disordered Hamiltonians in the mean field approximation.

In quantum chaos, the Bohigas–Giannoni–Schmit (BGS) conjecture[3] asserts that the spectral statistics of quantum systems whose classical counterparts exhibit chaotic behaviour are described by random matrix theory.

Random matrix theory has also found applications to quantum gravity in two dimensions,[4] mesoscopic physics,[5] and other areas.[6][7][8][9][10]

Mathematical statistics and numerical analysis

In multivariate statistics, random matrices were introduced by John Wishart for statistical analysis of large samples;[11] see estimation of covariance matrices.

Tail bounds have been established that extend the classical scalar Chernoff, Bernstein, and Hoeffding inequalities to the largest eigenvalues of finite sums of random Hermitian matrices.[12] Corresponding results for the maximum singular values of rectangular matrices follow as corollaries.

In numerical analysis, random matrices have been used since the work of John von Neumann and Herman Goldstine[13] to describe computation errors in operations such as matrix multiplication. For more recent results, see [14].

Number theory

In number theory, the distribution of zeros of the Riemann zeta function (and other L-functions) is modelled by the distribution of eigenvalues of certain random matrices.[15] The connection was first discovered by Hugh Montgomery and Freeman J. Dyson. It is connected to the Hilbert–Pólya conjecture.

Gaussian ensembles

The most studied random matrix ensembles are the Gaussian ensembles.

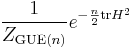

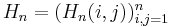

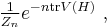

The Gaussian unitary ensemble GUE(n) is described by the Gaussian measure with density

on the space of n × n Hermitian matrices H = (H_ij), i, j = 1, …, n. Here Z_GUE(n) = 2^(n/2) π^(n²/2) is a normalisation constant, chosen so that the integral of the density is equal to one. The term unitary refers to the fact that the distribution is invariant under unitary conjugation. The Gaussian unitary ensemble models Hamiltonians lacking time-reversal symmetry.
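The stated constant can be checked by evaluating the Gaussian integral directly. The sketch below assumes a density of the form exp(−(a/2) tr H²)/Z(a), where the scale parameter a > 0 is introduced here only for illustration, and uses tr H² = ∑_i H_ii² + 2 ∑_{i<j} |H_ij|² with Lebesgue measure on the n real diagonal entries and on the real and imaginary parts of the entries above the diagonal.

```latex
\begin{aligned}
Z(a) &= \prod_{i=1}^{n} \int_{\mathbb{R}} e^{-\frac{a}{2} x^{2}}\,dx
        \;\prod_{1 \le i < j \le n} \int_{\mathbb{R}^{2}} e^{-a (x^{2} + y^{2})}\,dx\,dy \\
     &= \left(\frac{2\pi}{a}\right)^{n/2} \left(\frac{\pi}{a}\right)^{n(n-1)/2}
      = 2^{n/2} \left(\frac{\pi}{a}\right)^{n^{2}/2}.
\end{aligned}
```

With a = 1 this gives 2^(n/2) π^(n²/2), the value quoted above. Under the rescaling a = n, which is often used so that the spectrum stays bounded as n grows, the same computation gives 2^(n/2) (π/n)^(n²/2).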

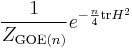

The Gaussian orthogonal ensemble GOE(n) is described by the Gaussian measure with density

on the space of n × n real symmetric matrices H = (H_ij), i, j = 1, …, n. Its distribution is invariant under orthogonal conjugation, and it models Hamiltonians with time-reversal symmetry.

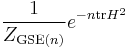

The Gaussian symplectic ensemble GSE(n) is described by the Gaussian measure with density

on the space of n × n quaternionic Hermitian matrices H = (H_ij), i, j = 1, …, n. Its distribution is invariant under conjugation by the symplectic group, and it models Hamiltonians with time-reversal symmetry but no rotational symmetry.
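Matrices from the orthogonal and unitary ensembles can be sampled by symmetrising a matrix of independent Gaussian entries. The following is a minimal sketch in Python with NumPy, not a reference implementation. The function names `sample_goe` and `sample_gue` are hypothetical, and the scaling (off-diagonal entries with second moment 1/n, so that the spectrum stays close to the interval [−2, 2]) is an assumption about the normalisation.

```python
import numpy as np

def sample_goe(n, rng):
    """Real symmetric Gaussian matrix.

    Off-diagonal entries have variance 1/n, diagonal entries variance 2/n
    (assumed normalisation).
    """
    a = rng.standard_normal((n, n))
    return (a + a.T) / np.sqrt(2 * n)

def sample_gue(n, rng):
    """Complex Hermitian Gaussian matrix.

    E|H_ij|^2 = 1/n off the diagonal, real diagonal entries with variance 1/n
    (assumed normalisation).
    """
    a = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
    return (a + a.conj().T) / (2 * np.sqrt(n))

rng = np.random.default_rng(0)
goe = sample_goe(200, rng)
gue = sample_gue(200, rng)

# eigvalsh exploits the symmetric / Hermitian structure and returns real eigenvalues
goe_eigs = np.linalg.eigvalsh(goe)
gue_eigs = np.linalg.eigvalsh(gue)
```

The symplectic ensemble is omitted here because it requires a representation of quaternionic entries, for example as 2 × 2 complex blocks.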

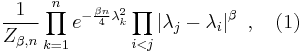

The joint probability density for the eigenvalues λ_1, λ_2, ..., λ_n of GUE/GOE/GSE is given by

where β = 1 for GOE, β = 2 for GUE, and β = 4 for GSE; Z_β,n is a normalisation constant which can be explicitly computed (see Selberg integral). In the case of GUE (β = 2), formula (1) describes a determinantal point process.
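The effect of the exponent β is easiest to see for n = 2. The worked example below assumes that formula (1) has the standard form of a product of Gaussian weights times |λ_j − λ_i|^β; the constant β/4 in the exponent depends on the normalisation chosen and is an assumption here.

```latex
\begin{aligned}
p(\lambda_1, \lambda_2) &\propto |\lambda_2 - \lambda_1|^{\beta}\,
   e^{-\frac{\beta}{4}\left(\lambda_1^{2} + \lambda_2^{2}\right)}, \\
\lambda_1^{2} + \lambda_2^{2} &= \tfrac{1}{2}(\lambda_1 + \lambda_2)^{2}
   + \tfrac{1}{2}(\lambda_2 - \lambda_1)^{2}, \\
p(s) &\propto s^{\beta}\, e^{-\frac{\beta}{8} s^{2}}, \qquad s = |\lambda_2 - \lambda_1| .
\end{aligned}
```

The last line follows by integrating out the sum λ_1 + λ_2. The spacing density vanishes like s^β as s → 0, so the eigenvalues repel each other, and the repulsion is stronger for larger β.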

Generalisations

Wigner matrices are random Hermitian matrices such that the entries above the main diagonal are independent random variables with zero mean and have identical second moments.
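The definition does not require Gaussian entries. As an illustration, the sketch below builds a real symmetric Wigner matrix whose entries on and above the diagonal are independent random signs, which have zero mean and second moment 1. The function name `sample_wigner_sign` and the choice of ±1 entries are assumptions made for this example.

```python
import numpy as np

def sample_wigner_sign(n, rng):
    """Real symmetric matrix with independent +/-1 entries on and above the diagonal."""
    signs = rng.choice([-1.0, 1.0], size=(n, n))
    upper = np.triu(signs)                # entries on and above the diagonal
    return upper + np.triu(upper, 1).T    # mirror the strict upper triangle

rng = np.random.default_rng(1)
w = sample_wigner_sign(300, rng)

# independent entries strictly above the diagonal
off_diag = w[np.triu_indices(300, k=1)]
empirical_mean = off_diag.mean()
```

Such a matrix is a Wigner matrix but does not belong to an invariant ensemble, since its distribution is not preserved by orthogonal conjugation.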

Invariant matrix ensembles are random Hermitian matrices whose density on the space of real symmetric/Hermitian/quaternionic Hermitian matrices is proportional to exp(−n tr V(H)), where the function V is called the potential.

The Gaussian ensembles are the only common special cases of these two classes of random matrices.

Spectral theory of random matrices

The spectral theory of random matrices studies the distribution of the eigenvalues as the size of the matrix goes to infinity.

Global regime

In the global regime, one is interested in the distribution of linear statistics of the form N_f,H = n^(−1) tr f(H).

Empirical spectral measure

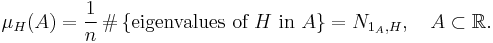

The empirical spectral measure μ_H of H is the probability measure that places mass 1/n at each eigenvalue of H, so that μ_H(A) = n^(−1) #{j : λ_j ∈ A} for every interval A.

Usually, the limit of μ_H is a deterministic measure; this is a particular case of self-averaging. The cumulative distribution function of the limiting measure is called the integrated density of states and is denoted N(λ). If the integrated density of states is differentiable, its derivative is called the density of states and is denoted ρ(λ).

The limit of the empirical spectral measure for Wigner matrices was described by Eugene Wigner; see Wigner's law. A more general theory was developed by Marchenko and Pastur.[16][17]
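The convergence of the empirical spectral measure can be observed numerically. The sketch below compares the empirical distribution function of one sampled matrix with the integrated density of states of the semicircle law on [−2, 2]. It assumes a real symmetric Gaussian matrix with off-diagonal variance 1/n; the helper names `semicircle_cdf` and `empirical_cdf` are hypothetical.

```python
import numpy as np

def semicircle_cdf(x):
    """Integrated density of states N(x) of the semicircle law on [-2, 2]."""
    x = np.clip(x, -2.0, 2.0)
    return 0.5 + x * np.sqrt(4.0 - x**2) / (4.0 * np.pi) + np.arcsin(x / 2.0) / np.pi

def empirical_cdf(eigs, x):
    """mu_H((-inf, x]): fraction of eigenvalues not exceeding x."""
    return np.searchsorted(np.sort(eigs), x, side="right") / eigs.size

rng = np.random.default_rng(2)
n = 1000
a = rng.standard_normal((n, n))
h = (a + a.T) / np.sqrt(2 * n)   # assumed scaling: off-diagonal variance 1/n
eigs = np.linalg.eigvalsh(h)

# largest deviation between the two distribution functions on a grid
grid = np.linspace(-2.5, 2.5, 101)
max_gap = np.max(np.abs(empirical_cdf(eigs, grid) - semicircle_cdf(grid)))
```

For a single sample of this size the two distribution functions are expected to differ only slightly, which reflects the self-averaging mentioned above.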

The limit of the empirical spectral measure of invariant matrix ensembles is described by a certain integral equation which arises from potential theory.[18]

Fluctuations

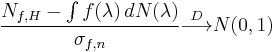

For the linear statistics N_f,H = n^(−1) ∑_j f(λ_j), one is also interested in the fluctuations about ∫ f(λ) dN(λ). For many classes of random matrices, a central limit theorem is known for these fluctuations after suitable normalisation.
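The size of these fluctuations can be explored by simulation. The sketch below estimates the mean and standard deviation of N_f,H for f(x) = x² over repeated samples at two matrix sizes. The test function, the sample counts, and the Gaussian real symmetric model with off-diagonal variance 1/n are all choices made for this illustration; for the semicircle law on [−2, 2] the limiting value ∫ x² dN(x) equals 1.

```python
import numpy as np

def sample_goe(n, rng):
    """Real symmetric Gaussian matrix with off-diagonal variance 1/n (assumed scaling)."""
    a = rng.standard_normal((n, n))
    return (a + a.T) / np.sqrt(2 * n)

def linear_statistic(h, f):
    """N_{f,H} = n^{-1} * sum_j f(lambda_j) for a symmetric or Hermitian matrix h."""
    eigs = np.linalg.eigvalsh(h)
    return np.mean(f(eigs))

def square(x):
    return x ** 2

rng = np.random.default_rng(3)
stats = {}
for n in (50, 200):
    values = np.array(
        [linear_statistic(sample_goe(n, rng), square) for _ in range(200)]
    )
    stats[n] = (values.mean(), values.std())   # (sample mean, sample std)
```

In this example the standard deviation shrinks roughly in proportion to 1/n rather than 1/√n, so quadrupling n reduces it by about a factor of four. This is much smaller than the fluctuation of an average of n independent variables.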

Local regime

In the local regime, one is interested in the spacings between eigenvalues, and, more generally, in the joint distribution of eigenvalues in an interval of length of order 1/n. One distinguishes between bulk statistics, pertaining to intervals inside the support of the limiting spectral measure, and edge statistics, pertaining to intervals near the boundary of the support.

Bulk statistics

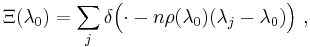

Formally, fix λ_0 in the interior of the support of N(λ). Then consider the point process Ξ(λ_0) = ∑_j δ(· − n ρ(λ_0)(λ_j − λ_0)), where λ_j are the eigenvalues of the random matrix.

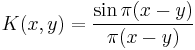

The point process Ξ(λ_0) captures the statistical properties of eigenvalues in the vicinity of λ_0. For the Gaussian ensembles, the limit of Ξ(λ_0) is known;[2] thus, for GUE it is a determinantal point process with the kernel K(x, y) = sin(π(x − y)) / (π(x − y)) (the sine kernel).

The universality principle postulates that the limit of Ξ(λ_0) as n → ∞ should depend only on the symmetry class of the random matrix (and neither on the specific model of random matrices nor on λ_0). This was rigorously proved for several models of random matrices, including invariant matrix ensembles[21][22] and Wigner matrices.[23][24]
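Bulk statistics can be probed numerically through nearest-neighbour spacings. The sketch below samples complex Hermitian Gaussian matrices, keeps the central half of each spectrum, and rescales the gaps by n ρ(λ), taking ρ to be the semicircle density on [−2, 2]. The helper names, the matrix size, the number of samples, and the scaling with E|H_ij|² = 1/n are assumptions made for this illustration.

```python
import numpy as np

def sample_gue(n, rng):
    """Complex Hermitian Gaussian matrix with E|H_ij|^2 = 1/n (assumed scaling)."""
    a = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
    return (a + a.conj().T) / (2 * np.sqrt(n))

def bulk_spacings(eigs, keep=0.5):
    """Rescaled gaps between consecutive eigenvalues in the central part of the spectrum.

    Each gap is multiplied by n * rho(midpoint), with rho the semicircle density,
    so that the mean rescaled spacing is close to 1.
    """
    eigs = np.sort(eigs)
    n = eigs.size
    lo = int(n * (1 - keep) / 2)
    mid = eigs[lo:n - lo]
    centres = 0.5 * (mid[1:] + mid[:-1])
    rho = np.sqrt(4.0 - centres**2) / (2.0 * np.pi)
    return n * rho * np.diff(mid)

rng = np.random.default_rng(4)
n = 400
spacings = np.concatenate(
    [bulk_spacings(np.linalg.eigvalsh(sample_gue(n, rng))) for _ in range(20)]
)
mean_spacing = spacings.mean()
small_fraction = np.mean(spacings < 0.1)   # share of very small gaps
```

Very small rescaled gaps are rare in such samples. For comparison, independent points with unit mean spacing would have roughly 10% of their gaps below 0.1.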

Edge statistics

Other classes of random matrices

Wishart matrices

Wishart matrices are n × n random matrices of the form H = X X*, where X is an n × n random matrix with independent entries, and X* is its conjugate transpose. In the important special case considered by Wishart, the entries of X are identically distributed Gaussian random variables (either real or complex).

The limit of the empirical spectral measure of Wishart matrices was found by Vladimir Marchenko and Leonid Pastur;[16] see Marchenko–Pastur distribution.
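The following sketch samples a real Wishart matrix with square X and compares its spectrum with the Marchenko–Pastur law for aspect ratio 1, which is supported on [0, 4] and has density √((4 − t)/t)/(2π). The 1/√n scaling of the entries of X and the helper name `mp_cdf_square` are assumptions made for this illustration.

```python
import numpy as np

def mp_cdf_square(t):
    """Distribution function of the Marchenko-Pastur law with aspect ratio 1.

    Obtained from the density sqrt((4 - t) / t) / (2 * pi) on [0, 4]
    via the substitution t = 4 * sin(theta)**2.
    """
    theta = np.arcsin(np.sqrt(np.clip(t, 0.0, 4.0)) / 2.0)
    return (2.0 * theta + np.sin(2.0 * theta)) / np.pi

rng = np.random.default_rng(5)
n = 500
x = rng.standard_normal((n, n)) / np.sqrt(n)   # assumed scaling: entry variance 1/n
h = x @ x.T                                     # real case: X* is the transpose
eigs = np.linalg.eigvalsh(h)

# empirical mass of [0, 1] against the limiting value 1/3 + sqrt(3) / (2 * pi)
empirical_mass = np.mean(eigs <= 1.0)
limiting_mass = mp_cdf_square(1.0)
```

Since H = X X* is positive semi-definite, all eigenvalues are non-negative up to rounding error, and in this scaling the largest one is expected to lie close to 4.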

Random unitary matrices

Non-Hermitian random matrices

See circular law.

Guide to references

- Books on random matrix theory:[2][25]

- Survey articles on random matrix theory:[14][17][26][27]

- Historic works:[1][11][13]

References

- ^ a b Wigner, E. (1955). "Characteristic vectors of bordered matrices with infinite dimensions". Ann. of Math. 62 (3): 548–564. doi:10.2307/1970079.

- ^ a b c Mehta, M.L. (2004). Random Matrices. Amsterdam: Elsevier/Academic Press. ISBN 0-120-88409-7.

- ^ Bohigas, O.; Giannoni, M.J.; Schmit, C. (1984). "Characterization of Chaotic Quantum Spectra and Universality of Level Fluctuation Laws". Phys. Rev. Lett. 52: 1–4. Bibcode 1984PhRvL..52....1B. doi:10.1103/PhysRevLett.52.1. http://link.aps.org/doi/10.1103/PhysRevLett.52.1.

- ^ Franchini F, Kravtsov VE (October 2009). "Horizon in random matrix theory, the Hawking radiation, and flow of cold atoms". Phys. Rev. Lett. 103 (16): 166401. Bibcode 2009PhRvL.103p6401F. doi:10.1103/PhysRevLett.103.166401. PMID 19905710. http://link.aps.org/doi/10.1103/PhysRevLett.103.166401.

- ^ Sánchez D, Büttiker M (September 2004). "Magnetic-field asymmetry of nonlinear mesoscopic transport". Phys. Rev. Lett. 93 (10): 106802. arXiv:cond-mat/0404387. Bibcode 2004PhRvL..93j6802S. doi:10.1103/PhysRevLett.93.106802. PMID 15447435. http://link.aps.org/doi/10.1103/PhysRevLett.93.106802.

- ^ Rychkov VS, Borlenghi S, Jaffres H, Fert A, Waintal X (August 2009). "Spin torque and waviness in magnetic multilayers: a bridge between Valet-Fert theory and quantum approaches". Phys. Rev. Lett. 103 (6): 066602. Bibcode 2009PhRvL.103f6602R. doi:10.1103/PhysRevLett.103.066602. PMID 19792592. http://link.aps.org/doi/10.1103/PhysRevLett.103.066602.

- ^ Callaway DJE (April 1991). "Random matrices, fractional statistics, and the quantum Hall effect". Phys. Rev., B Condens. Matter 43 (10): 8641–8643. Bibcode 1991PhRvB..43.8641C. doi:10.1103/PhysRevB.43.8641. PMID 9996505. http://link.aps.org/doi/10.1103/PhysRevB.43.8641.

- ^ Janssen M, Pracz K (June 2000). "Correlated random band matrices: localization-delocalization transitions". Phys Rev E Stat Phys Plasmas Fluids Relat Interdiscip Topics 61 (6 Pt A): 6278–86. arXiv:cond-mat/9911467. Bibcode 2000PhRvE..61.6278J. doi:10.1103/PhysRevE.61.6278. PMID 11088301. http://link.aps.org/doi/10.1103/PhysRevE.61.6278.

- ^ Zumbühl DM, Miller JB, Marcus CM, Campman K, Gossard AC (December 2002). "Spin-orbit coupling, antilocalization, and parallel magnetic fields in quantum dots". Phys. Rev. Lett. 89 (27): 276803. arXiv:cond-mat/0208436. Bibcode 2002PhRvL..89A6803Z. doi:10.1103/PhysRevLett.89.276803. PMID 12513231. http://link.aps.org/doi/10.1103/PhysRevLett.89.276803.

- ^ Bahcall SR (December 1996). "Random Matrix Model for Superconductors in a Magnetic Field". Phys. Rev. Lett. 77 (26): 5276–5279. arXiv:cond-mat/9611136. Bibcode 1996PhRvL..77.5276B. doi:10.1103/PhysRevLett.77.5276. PMID 10062760. http://link.aps.org/doi/10.1103/PhysRevLett.77.5276.

- ^ a b Wishart, J. (1928). "Generalized product moment distribution in samples". Biometrika 20A (1–2): 32–52.

- ^ Tropp, J. (2011). "User-Friendly Tail Bounds for Sums of Random Matrices". Foundations of Computational Mathematics. doi:10.1007/s10208-011-9099-z.

- ^ a b von Neumann, J.; Goldstine, H.H. (1947). "Numerical inverting of matrices of high order". Bull. Amer. Math. Soc. 53 (11): 1021–1099. doi:10.1090/S0002-9904-1947-08909-6.

- ^ a b Edelman, A.; Rao, N.R. (2005). "Random matrix theory". Acta Numer. 14: 233–297. doi:10.1017/S0962492904000236.

- ^ Keating, Jon (1993). "The Riemann zeta-function and quantum chaology". Proc. Internat. School of Phys. Enrico Fermi CXIX: 145–185.

- ^ a b Marčenko, V.A.; Pastur, L.A. (1967). "Distribution of eigenvalues for some sets of random matrices". Mathematics of the USSR-Sbornik 1 (4): 457–483. Bibcode 1967SbMat...1..457M. doi:10.1070/SM1967v001n04ABEH001994. http://iopscience.iop.org/0025-5734/1/4/A01;jsessionid=F84D52B000FBFEFEF32CCB5FD3CEF2A1.c1.

- ^ a b Pastur, L.A. (1973). "Spectra of random self-adjoint operators". Russ. Math. Surv. 28 (1): 1–67. Bibcode 1973RuMaS..28....1P. doi:10.1070/RM1973v028n01ABEH001396.

- ^ Boutet de Monvel, A.; Pastur, L.; Shcherbina, M. (1995). "On the Statistical Mechanics Approach in the Random Matrix Theory: Integrated Density of States". J. Stat. Phys. 79 (3–4): 585–611. Bibcode 1995JSP....79..585D. doi:10.1007/BF02184872.

- ^ Johansson, K. (1998). "On fluctuations of eigenvalues of random Hermitian matrices". Duke Math. J. 91 (1): 151–204. doi:10.1215/S0012-7094-98-09108-6.

- ^ Pastur, L.A. (2005). "A simple approach to the global regime of Gaussian ensembles of random matrices". Ukrainian Math. J. 57 (6): 936–966. doi:10.1007/s11253-005-0241-4.

- ^ Pastur, L.; Shcherbina, M. (1997). "Universality of the local eigenvalue statistics for a class of unitary invariant random matrix ensembles". J. Statist. Phys. 86 (1–2): 109–147. Bibcode 1997JSP....86..109P. doi:10.1007/BF02180200.

- ^ Deift, P.; Kriecherbauer, T.; McLaughlin, K.T.-R.; Venakides, S.; Zhou, X. (1997). "Asymptotics for polynomials orthogonal with respect to varying exponential weights". Internat. Math. Res. Notices 16 (16): 759–782. doi:10.1155/S1073792897000500.

- ^ Erdős, L.; Péché, S.; Ramírez, J.A.; Schlein, B.; Yau, H.T. (2010). "Bulk universality for Wigner matrices". Comm. Pure Appl. Math. 63 (7): 895–925.

- ^ Tao, T.; Vu, V. (2010). "Random matrices: universality of local eigenvalue statistics up to the edge". Comm. Math. Phys. 298 (2): 549–572. Bibcode 2010CMaPh.298..549T. doi:10.1007/s00220-010-1044-5.

- ^ Anderson, G.W.; Guionnet, A.; Zeitouni, O. (2010). An introduction to random matrices. Cambridge: Cambridge University Press. ISBN 978-0-521-19452-5.

- ^ Diaconis, Persi (2003). "Patterns in eigenvalues: the 70th Josiah Willard Gibbs lecture". American Mathematical Society. Bulletin. New Series 40 (2): 155–178. doi:10.1090/S0273-0979-03-00975-3. MR1962294. http://www.ams.org/bull/2003-40-02/S0273-0979-03-00975-3/home.html.

- ^ Diaconis, Persi (2005). "What is ... a random matrix?". Notices of the American Mathematical Society 52 (11): 1348–1349. ISSN 0002-9920. MR2183871. http://www.ams.org/notices/200511/.

External links

- Fyodorov, Y. (2011). "Random matrix theory". Scholarpedia 6 (3): 9886. http://www.scholarpedia.org/article/Random_matrix_theory.

- Weisstein, E.W. "Random Matrix". MathWorld--A Wolfram Web Resource. http://mathworld.wolfram.com/RandomMatrix.html.